AI hallucinations are a significant challenge in the development and deployment of large language models (LLMs). A hallucination occurs when a model generates information that is incorrect, nonsensical, or not grounded in its source material. This is particularly concerning in applications that require high accuracy, such as healthcare, legal advice, or customer service. At PerfectApps, we address this challenge with AI API integrations and Retrieval-Augmented Generation (RAG) systems to build precise and reliable chatbots and applications.

What Causes AI Hallucinations?

AI hallucinations primarily stem from the limitations of training data and the probabilistic nature of AI text generation. They also arise because language models are trained to reproduce patterns in human-written text, optimizing for plausible-sounding output rather than verified facts. Just as humans can speak with great confidence about things that are incorrect or misleading, a model that has learned from human writing can produce confidently incorrect outputs.

Several factors contribute to AI hallucinations:

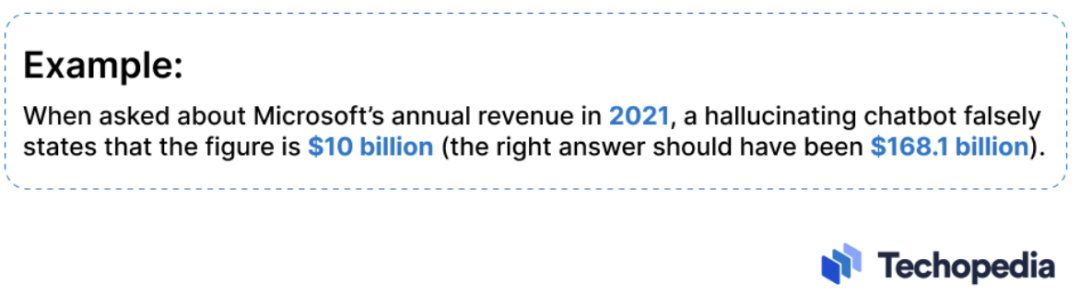

1. Insufficient or Low-Quality Training Data: AI models are only as good as the data they are trained on. If the data is outdated, insufficient, or low quality, the AI might generate responses based on limited or inaccurate information. For instance, if an AI is not trained on certain recent events, it might fill in gaps with incorrect or fabricated details.

2. Bad Data Retrieval: Modern AI tools often pull in additional data from external sources. However, they lack the ability to fact-check this data effectively, leading to absurd outputs, such as a case where an AI recommended putting glue on pizza.

3. Overfitting and Bias: When models are trained on a limited or biased dataset, they may overfit, leading to outputs that reflect these biases or repeat errors present in the data. This is why AI can sometimes echo misinformation or stereotypes.

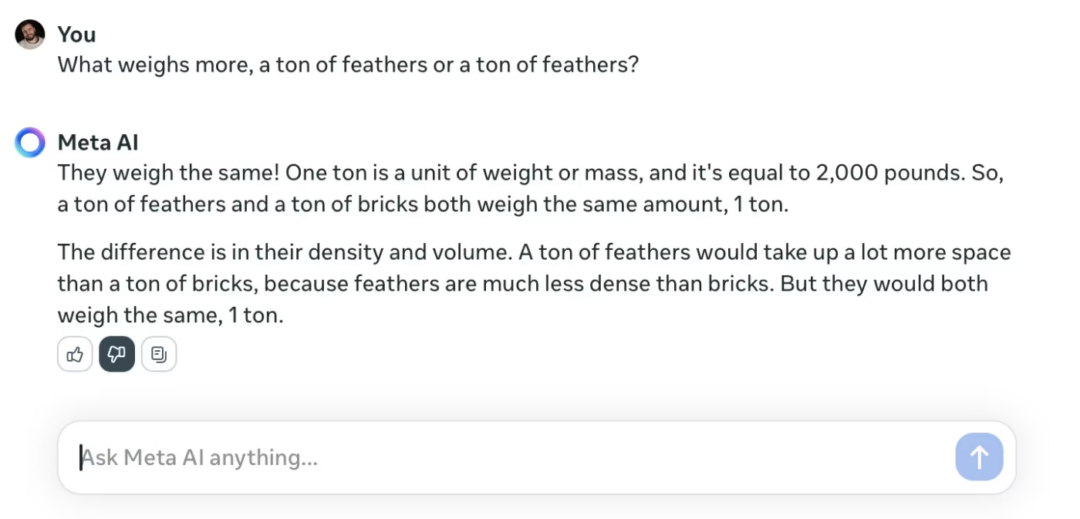

4. Ambiguous Prompts: Vague or poorly structured prompts can cause the AI to make incorrect assumptions, leading to hallucinations. For example, an AI might misinterpret a request for information and provide an irrelevant or incorrect response.

5. Human-like Reasoning Errors: Since language models learn from human-written text, they can also reproduce human-like errors. For instance, just as a person might confidently state something incorrect due to a misunderstanding or a cognitive bias, AI can generate similar outputs. Because this behavior is intrinsic to how the models are trained, hallucinations can be minimized, but they may never be entirely eliminated.

Examples of AI Hallucinations:

- Responding incorrectly to a prompt that the model should be able to answer correctly.

- Repeating errors, satire, or lies from other sources as if they were factual.

- Failing to provide necessary context or critical information, which can lead to harmful consequences.

For example, a legal chatbot might cite non-existent case law, or a customer service bot might mislead users about company policies, causing reputational and financial damage.

Mitigating AI Hallucinations: The Role of Temperature Settings

Temperature settings in AI models control the randomness of outputs, which influences the likelihood of hallucinations. At PerfectApps, we use a temperature setting of 0.2 in our RAG systems so that the AI’s responses are focused and largely repeatable, both essential characteristics for business-critical applications.

- Low Temperature (e.g., 0.1 – 0.5): This leads to more repetitive and predictable text. The model strongly favors the most likely next word, so less probable alternatives are rarely chosen.

- Medium Temperature (e.g., 0.5 – 1.0): There’s a balance between predictability and creativity. The model is more likely to produce coherent and contextually relevant text, but with some degree of variability.

- High Temperature (e.g., 1.0 – 2.0): Here, the model produces more diverse and creative text. However, this can also lead to less coherent or contextually irrelevant content, as the model is more likely to take risks in its word choices.
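
To make the effect of these ranges concrete, the following minimal sketch shows how temperature is commonly applied: the model's raw scores (logits) are divided by the temperature before being converted to probabilities with a softmax. The logit values and the function name `softmax_with_temperature` are illustrative assumptions, not taken from any particular model or library.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Convert raw scores (logits) into probabilities, scaled by temperature."""
    if temperature <= 0:
        # A temperature of exactly 0 is usually treated as "always pick the top token".
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical logits for three candidate next words.
logits = [2.0, 1.0, 0.5]
for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At a low temperature almost all of the probability mass lands on the top-scoring word, while at a high temperature the three candidates move closer to equally likely, which is where the extra variety (and extra risk) comes from.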

Experimenting with Temperature

Asking ChatGPT to generate a blog post title about dog food with a temperature of 0.1 yielded a more sober, factual-sounding response:

“The Science of Sustenance: Exploring Nutrient-Rich Dog Food Formulas”

Repeating the prompt with a temperature of 0.9 resulted in a more creative, less predictable title. In a factual task, the same looseness is what raises the risk of hallucination:

“Drool-Worthy Doggie Dinners: A Gourmet Journey to Canine Cuisine”

Adjusting Temperature in Different LLMs

GPT-3, GPT-4, GPT-4o by OpenAI: Temperature can be set from 0 to 2, with 1 being the API default. Lower temperatures (0.1-0.4) make the output more focused and repeatable, while higher temperatures (0.7-1.0+) increase creativity but also the risk of hallucinations.

DALL-E 2 by OpenAI: OpenAI's image generation API does not expose a temperature parameter, so the diversity of generated images cannot be tuned this way. Variation comes from the wording of the prompt and from requesting several images per prompt.

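Whisper speech recognition model by OpenAI: Temperature is a sampling temperature between 0 and 1 rather than a confidence threshold. Lower temperatures make transcription more conservative and repeatable, while higher temperatures make the output more random. In OpenAI's transcription API the default is 0, in which case the model uses log probability to raise the temperature automatically until certain thresholds are hit.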

Claude by Anthropic: Temperature can be adjusted between 0 and 1, with 1.0 being the API default. Lower temperatures (0.1-0.4) make the output more focused and consistent, while higher temperatures (0.6-1.0) increase diversity but also the risk of factual errors.

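Llama by Meta: Llama models, including Llama 2, are distributed as model weights, so the temperature range and default depend on the inference library or hosting service rather than on the model itself. Meta's reference Llama 2 example scripts use a temperature of 0.6 (with top_p set to 0.9). As with other models, lower temperatures (0.1-0.4) make the output more focused and repeatable, while higher temperatures (0.6-1.0) increase creativity but also the risk of hallucinations.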

Grok by xAI: Grok, the large language model developed by xAI (the Grok-1 weights were released openly), exposes a temperature parameter through its API. Check xAI's API reference for the current range and default. As with other models, this setting controls the randomness and diversity of the model’s outputs.

Mistral by Mistral AI: The temperature setting in Mistral models can range from 0 to 1.5, with a default value of 0.7. Lower temperatures (0.0-0.4) make the output more deterministic and focused, while higher temperatures (0.8-1.0) increase randomness and creativity.
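
Because the accepted range differs between providers, it can help to validate a temperature value before sending a request. The sketch below is a hypothetical helper, not part of any provider's SDK: the name `checked_temperature` and the `TEMPERATURE_RANGES` table are assumptions for illustration, and the ranges are the ones listed in this article, which should be verified against each provider's current API reference.

```python
# Ranges as listed in this article; treat them as assumptions and verify
# them against each provider's current API reference before relying on them.
TEMPERATURE_RANGES = {
    "openai": (0.0, 2.0),
    "anthropic": (0.0, 1.0),
    "mistral": (0.0, 1.5),
}

def checked_temperature(provider, value):
    """Return value unchanged if it lies inside the provider's listed range."""
    try:
        low, high = TEMPERATURE_RANGES[provider]
    except KeyError:
        raise ValueError(f"no temperature range listed for {provider!r}") from None
    if not low <= value <= high:
        raise ValueError(
            f"temperature {value} is outside {low}-{high} for {provider}"
        )
    return value

# A value of 0.2 is valid for every provider in the table.
for name in TEMPERATURE_RANGES:
    print(name, checked_temperature(name, 0.2))
```

Raising an error instead of silently clamping the value is a deliberate choice in this sketch: a setting that was tuned for one provider and is out of range for another is usually a configuration mistake worth surfacing.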

Why Temperature Matters: The temperature setting is a crucial factor in determining the trade-off between creativity and accuracy. For business applications where precision and reliability are paramount, such as those managed through PerfectApps, a low temperature setting keeps the AI's output focused and repeatable, although it does not guarantee accuracy on its own. Combined with our integration of RAG systems, it helps to substantially reduce the occurrence of AI hallucinations.

Mitigating AI Hallucinations: Using the RAG System

Retrieval-Augmented Generation (RAG) is the cornerstone of our approach to minimizing AI hallucinations. By grounding AI responses in up-to-date, relevant information retrieved at query time, RAG systems make outputs far more likely to be accurate and contextually appropriate. One of the key mechanisms in RAG is semantic search, where the system retrieves passages and ranks them by a relevance score. At PerfectApps, we recommend that only results with a score higher than 0.8 are considered for generating responses, which filters out weakly related and potentially misleading data.
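
The score filter described above can be sketched as follows. This is a minimal illustration that assumes the relevance score is the cosine similarity between embedding vectors. The three-dimensional vectors, the document texts, and the function names are made up for the example; a real system would obtain embeddings from an embedding model and typically store them in a vector database.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def retrieve(query_vec, documents, min_score=0.8):
    """Return (score, text) pairs scoring above min_score, best match first."""
    scored = [(cosine_similarity(query_vec, vec), text) for text, vec in documents]
    kept = [pair for pair in scored if pair[0] > min_score]
    kept.sort(key=lambda pair: pair[0], reverse=True)
    return kept

# Toy embeddings, invented for illustration only.
documents = [
    ("Refunds are accepted within 30 days.", [0.9, 0.1, 0.0]),
    ("Our office is closed on public holidays.", [0.1, 0.9, 0.2]),
    ("Refund requests need an order number.", [0.8, 0.3, 0.1]),
]
query_vec = [1.0, 0.2, 0.0]  # stands in for an embedded question about refunds

results = retrieve(query_vec, documents)
for score, text in results:
    print(round(score, 3), text)
```

Only the two refund-related passages clear the 0.8 threshold here; the unrelated passage about office hours is dropped before it can reach the model.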

This approach is further enhanced by using a temperature setting of 0.2, which makes the AI’s outputs focused, precise, and largely repeatable. The combination of RAG’s rigorous data grounding and low temperature settings results in AI responses that are reliable and exhibit very few hallucinations.
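
One way to combine the two settings is sketched below: retrieved passages are placed in the prompt, the model is instructed to answer only from them, and the request carries a temperature of 0.2. The function name `build_grounded_request` and the instruction wording are assumptions for illustration. The `messages` and `temperature` field names follow the widely used chat-completion request style, but the exact request format depends on the provider.

```python
def build_grounded_request(question, passages, temperature=0.2):
    """Build a chat-style request dict that grounds the answer in passages.

    Returns None when no passage passed the relevance filter, so the caller
    can reply "I don't know" instead of letting the model guess.
    """
    if not passages:
        return None
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    system = (
        "Answer using only the numbered context passages. "
        "If the context does not contain the answer, say you do not know."
    )
    return {
        "temperature": temperature,
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": f"Context:\n{context}\n\nQuestion: {question}"},
        ],
    }

request = build_grounded_request(
    "How long do I have to request a refund?",
    ["Refunds are accepted within 30 days."],
)
print(request["messages"][1]["content"])
```

Returning `None` when nothing relevant was retrieved is a design choice in this sketch: declining to answer is usually safer for a business chatbot than generating a response with no supporting data.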

For a deeper dive into how RAG works and its benefits, you can read more in our dedicated blog post here.

Conclusion

AI hallucinations pose a significant challenge, but with the right strategies, such as careful tuning of temperature settings and the use of techniques like RAG, these issues can be mitigated. While AI may never be completely free from hallucinations, because it learns from human-written text and reproduces human-like errors, PerfectApps is committed to providing the tools and integrations necessary to create precise, trustworthy AI-driven applications. This helps our users harness the full potential of AI while minimizing risks.